Fake news, the DNA of tech firms: Gearing up for the 2019 polls

Social media companies seem quite serious about removing false news and propaganda from their platforms.

Before the advent of social media, the only time we interacted with and heard from our elected representatives was during electioneering. TV channels and newspapers were our only sources of information on happenings in the country, but “public opinion” was largely missing from the overall discourse.

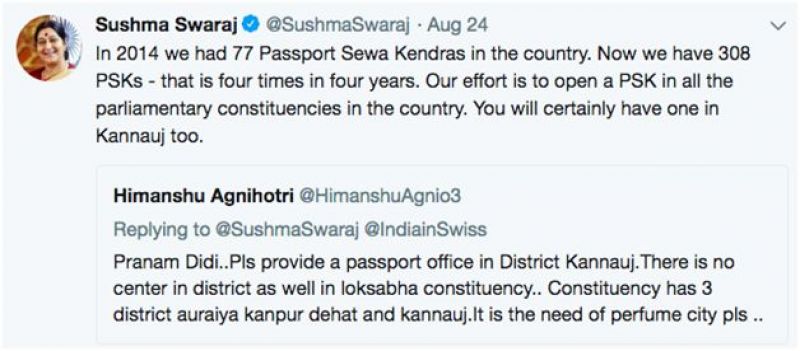

The internet and social media have overhauled how people access the news around them and how they interact with their elected representatives. Today, it’s really hard for politicians to make promises and then escape public scrutiny when they fail to deliver. Social media constantly reminds politicians of their promises and holds them up to public scrutiny. For instance, if a politician promised “cheaper electricity” during the election campaign, #CheapElectricityWhen is going to trend all over social media until the next election.

Social media has made our politicians more accountable and has been a great boost for democracy. (This is one reason why most non-democratic governments ban social media platforms in their countries.) However, social media does have a dark side. It turns out that it can also be used to spread false propaganda and to sabotage the public narrative. It started with simple spamming and trolling, but has quietly reached shocking levels of sophistication.

As the Cambridge Analytica scandal revealed, deliberate attempts are being made to change the way you think and to alter your political leanings. It gets even scarier when foreign countries use social media to influence the citizens of other countries and to interfere in their elections.

So, what are the social media companies doing?

Social media companies seem quite serious about removing false news and propaganda from their platforms. Here are some of the steps they have taken:

- Limiting WhatsApp forwards to five chats and labelling forwarded messages: This means far less circulation of every type of content on WhatsApp, including fake news and memes. It slows the spread of fake news, giving it a better chance of being identified and taken down. Previously, people could share anything they received with their entire phonebook, which meant anything could go viral in minutes. Because bad actors are always a small fraction of the people who use the platform to connect with friends and family, the restriction mostly affects ordinary users, and WhatsApp is seeing a lot of negative reviews from them.

- Labelling political ads and revealing all ads run by any Facebook page: Facebook has started labelling all political ads with information on who is funding them. This makes users more conscious of the ads they are seeing and lets them decide whether to opt out. Anyone can now also see all the ads that a Facebook page is running, although this isn’t something most business pages appreciate.

- Limiting viral video circulation: Mark Zuckerberg decided to cut down on the distribution of viral content on his platform and to prioritise content from people’s trusted networks. While this limits the rate at which malicious content spreads, it also limits the reach of pages. It translates into much less time spent by users on Facebook, and hence lower ad revenues.

- Educating users about fake news: Twitter and Facebook have actively used their platforms to educate users on how to spot fake news and how to report hate speech. As a result, users are more aware that they could be engaging with fake news.

What more can be done?

- Labelling fake news and maintaining a searchable archive of fake and verified news: Fake news quite often resurfaces and goes viral multiple times. Hence, it’s important that debunked stories, clearly marked as “fake news”, also make their way to people. This will help them recognise fake news even when it comes from a different source. A searchable archive of fake news can also act as a reference for validating news.

- Reddit-style public moderation: Reddit has done a great job of maintaining a high quality of news on its platform through a combination of automated rules, strict policies and crowd-sourced content moderation. Social networks like Facebook, Quora and Twitter can learn from Reddit and implement similar models.

What can you do to ensure you are not a victim of fake news or propaganda?

- Do a quick Google search: Whenever you come across a political meme, video or article that you wish to forward, do a quick Google search on the related keywords. If you don’t find any major news publications reporting similar information, it’s most likely fake news.

- Report: Whenever you suspect malicious content on the internet, report it so that social media companies can review it. If we all start reporting fake news, the platforms will be cleaned up fairly quickly.

- Check public opinion: More often than not, someone will eventually catch the fake news and point it out in the conversation. So look up the same content on public opinion platforms like Reddit, Wishfie and Twitter, and follow the conversations around those topics.

There’s no denying that bad actors still exist and that they will try their best to interfere in the Indian elections through social media. But I expect it will be a lot harder for them this time around, because of the following factors:

- In 2014, there was little or no awareness of election interference through social media, and hence the abuse went entirely unchecked. It’s clear that bad actors were actively leveraging social media to influence voters in 2014 too, when Facebook already had more than 100 million Indian users.

- Prior to 2014, quite a few developers, including Cambridge Analytica, had access to Facebook data through its platform program. Facebook has since closed that program and made it impossible for developers to access any Facebook data unless users explicitly opt in.

So bad actors can no longer get the same level of access to users and their data that they had during the previous elections. In addition, they are being actively hunted down. This leads me to believe that the 2019 elections will be relatively cleaner.

(The author is the co-founder and CEO of Wishfie, a video-based social network. Previously, he was a Facebook employee.)